Calling the Squash Orchestrator from a Jenkins pipeline

Configuring a Squash Orchestrator in Jenkins

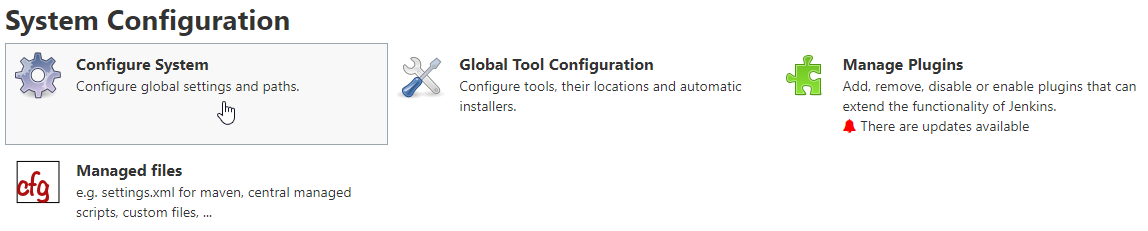

To access the configuration of the Squash Orchestrator, you first need to go the Configure System page accessible in the System Configuration space of Jenkins, through the Manage Jenkins tab:

A block named OpenTestFactory Orchestrator servers will then be available:

- Server id: This ID is generated automatically and cannot be modified. It is not used by the user.
- Server name: This name is defined by the user. It is the name referenced in the pipeline script of the workflow to be executed.
- Receptionist endpoint URL: The address of the receptionist micro-service of the orchestrator, with its port as defined when the orchestrator was launched. Please refer to the Squash Orchestrator documentation for further details.
- Workflow Status endpoint URL: The address of the observer micro-service of the orchestrator, with its port as defined when the orchestrator was launched. Please refer to the Squash Orchestrator documentation for further details.
- Credential: A Jenkins credential of type Secret text containing a JWT token used to authenticate to the orchestrator. Please refer to the OpenTestFactory Orchestrator documentation for further details on secure access to the orchestrator.
- Workflow Status poll interval: The interval between two successive updates of the workflow status.
- Workflow creation timeout: How long to wait for the workflow status to become available on the observer after the receptionist has received the workflow.
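To make the two timing settings more concrete, here is a hypothetical Groovy sketch of how a client could wait for a workflow status: it polls at a fixed interval and gives up if the observer has not reported any status within the creation timeout. The names waitForWorkflowStatus and fetchStatus are assumptions made for this illustration; this is not the plugin's implementation.

```groovy
// Hypothetical sketch, NOT the plugin's actual code.
// fetchStatus stands in for a call to the observer (Workflow Status endpoint);
// it returns null as long as the observer does not know the workflow yet.
def waitForWorkflowStatus(Closure fetchStatus, long pollIntervalMs, long creationTimeoutMs) {
    long deadline = System.currentTimeMillis() + creationTimeoutMs
    while (true) {
        def status = fetchStatus()
        if (status != null) {
            return status // the observer has registered the workflow
        }
        if (System.currentTimeMillis() >= deadline) {
            throw new IllegalStateException(
                "Workflow status not available after ${creationTimeoutMs} ms")
        }
        Thread.sleep(pollIntervalMs) // wait one poll interval before asking again
    }
}

// Usage with a fake observer that only knows the workflow from the third call on.
int calls = 0
def status = waitForWorkflowStatus({ ++calls >= 3 ? 'RUNNING' : null }, 10L, 1000L)
println "Got status ${status} after ${calls} polls"
```

A shorter poll interval gives more responsive status updates at the cost of more requests to the observer.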

Call to the Squash Orchestrator from a Jenkins pipeline

Once at least one Squash Orchestrator is configured in Jenkins, a Pipeline job can call it through a dedicated pipeline method, runOTFWorkflow.

Below is an example of a simple pipeline that calls the orchestrator:

```groovy
node {
    stage('Stage 1: sanity check') {
        echo 'OK pipelines work in the test instance'
    }
    stage('Stage 2: steps check') {
        // Inject the workflow file stored with the Config File Provider plugin
        configFileProvider([configFile(
            fileId: '600492a8-8312-44dc-ac18-b5d6d30857b4',
            targetLocation: 'testWorkflow.json'
        )]) {
            // Send the workflow to the orchestrator and follow its execution
            def workflow_id = runOTFWorkflow(
                workflowPathName: 'testWorkflow.json',
                workflowTimeout: '300S',
                serverName: 'defaultServer',
                jobDepth: 2,
                stepDepth: 3,
                dumpOnError: true
            )
            echo "We just ran the Squash Orchestrator workflow $workflow_id"
        }
    }
}
```

The runOTFWorkflow method sends a workflow to the orchestrator for execution. It takes the following parameters:

- workflowPathName: The path to the file containing the workflow. Here, the file is injected through the Config File Provider plugin, but it can also be obtained by other means (retrieval from an SCM, generation on the fly, …).
- workflowTimeout: Timeout on workflow execution. It triggers if the workflow takes longer than expected to run, for whatever reason, and is meant to end stalled workflows (for example, due to unreachable environments or unsupported action calls). Adapt it to the expected duration of the tests in the workflow.
- serverName: Name of the Squash Orchestrator server to use, as defined in the OpenTestFactory Orchestrator servers block of the Jenkins configuration.
- jobDepth: Display depth of nested job logs in the Jenkins console output. This parameter is optional; its default value is 1. The value 0 displays all jobs, whatever their nesting depth.
- stepDepth: Display depth of nested step logs in the Jenkins console output. This parameter is optional; its default value is 1. The value 0 displays all steps, whatever their nesting depth.
- dumpOnError: If true, all logs are printed again with the maximum job depth and step depth when a workflow fails. This parameter is optional; its default value is true.

To facilitate log reading and debugging, logs from test execution environments are always displayed, regardless of the depth of the logs requested.
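The display rules for jobDepth and stepDepth can be summarized as a simple predicate. The following Groovy sketch is a hypothetical illustration of the documented behavior, assuming that top-level jobs and steps have depth 1; the function name shouldDisplay is invented here and is not part of the plugin.

```groovy
// Hypothetical illustration of the documented jobDepth/stepDepth rules, not plugin code.
// depth:    nesting depth of a job or step (assumption: top level = 1)
// maxDepth: value of jobDepth or stepDepth (0 = no limit, default 1)
// fromExecutionEnvironment: logs from test execution environments are always shown
boolean shouldDisplay(int depth, int maxDepth = 1, boolean fromExecutionEnvironment = false) {
    if (fromExecutionEnvironment) {
        return true
    }
    return maxDepth == 0 || depth <= maxDepth
}

println shouldDisplay(1)           // top-level job with the default depth
println shouldDisplay(2)           // nested job, hidden with the default depth
println shouldDisplay(5, 0)        // 0 displays every depth
println shouldDisplay(4, 2, true)  // execution environment logs ignore the depth
```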

Passing environment variables or files

In some cases, you need to pass environment variables or files to the workflow.

For variables, simply declare them in the environment section of the pipeline definition. These variables must be prefixed with OPENTF_. They will be usable in the workflow without this prefix.
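The naming convention can be pictured with the following Groovy sketch, which keeps only the OPENTF_ variables of an environment map and strips their prefix. It only illustrates the documented rule: workflowVariables is a hypothetical helper, and the actual transmission of the variables to the orchestrator is handled by the plugin.

```groovy
// Hypothetical illustration of the OPENTF_ naming convention, not plugin code.
Map<String, String> workflowVariables(Map<String, String> env) {
    final String prefix = 'OPENTF_'
    env.findAll { name, value -> name.startsWith(prefix) }          // keep OPENTF_ variables only
       .collectEntries { name, value -> [(name.substring(prefix.length())): value] } // drop the prefix
}

def env = [OPENTF_FOO: '12', OPENTF_TARGET: 'https://example.com/target', PATH: '/usr/bin']
println workflowVariables(env)
```

Variables without the prefix, such as PATH here, are not seen by the workflow.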

Files must be declared using the fileResources parameter of the runOTFWorkflow method. This parameter is an array of ref elements, each composed of two attributes:

- name: the name of the file as expected by the workflow.
- path: the path of the file within the Jenkins job workspace.

The following example executes the workflows/my_workflow_2.yaml workflow, providing it with a file named file (whose content is that of my_data/file.xml) and two environment variables, FOO and TARGET:

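```groovy
pipeline {
    agent any
    environment {
        // Seen by the workflow as FOO and TARGET (the OPENTF_ prefix is removed).
        // Declarative values must be quoted strings, hence '12' rather than 12.
        OPENTF_FOO = '12'
        OPENTF_TARGET = 'https://example.com/target'
    }
    stages {
        stage('Greetings') {
            steps {
                echo 'Hello!'
            }
        }
        stage('QA') {
            steps {
                runOTFWorkflow(
                    workflowPathName: 'workflows/my_workflow_2.yaml',
                    workflowTimeout: '200S',
                    serverName: 'orchestrator',
                    jobDepth: 2,
                    stepDepth: 3,
                    dumpOnError: true,
                    // The workflow receives my_data/file.xml under the name 'file'
                    fileResources: [ref('name': 'file', 'path': 'my_data/file.xml')]
                )
            }
        }
    }
}
```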

Running a workflow in the inception execution environment

To use the Squash Orchestrator to execute a workflow in the inception execution environment, as described in the orchestrator documentation, adapt the pipeline according to the following template:

```groovy
node {
    stage('Stage 1: sanity check') {
        echo 'OK pipelines work in the test instance'
    }
    stage('Stage 2: steps check') {
        // Inject the inception workflow and the file it expects
        configFileProvider([
            configFile(fileId: 'inception_workflow.yaml', targetLocation: 'testWorkflow.yaml'),
            configFile(fileId: 'TEST-ForecastSuite.xml', targetLocation: 'TEST-ForecastSuite.xml')
        ]) {
            def workflow_id = runOTFWorkflow(
                workflowPathName: 'testWorkflow.yaml',
                workflowTimeout: '300S',
                serverName: 'defaultServer',
                jobDepth: 2,
                stepDepth: 3,
                dumpOnError: true,
                // 'myfile' corresponds to an entry of the files section of the workflow
                fileResources: [ref('name': 'myfile', 'path': 'TEST-ForecastSuite.xml')]
            )
            echo "We just ran the Squash Orchestrator workflow $workflow_id"
        }
    }
}
```

The fileResources parameter lists the files to transmit to the orchestrator; these files correspond to the entries of the files section of the workflow. Define this parameter as described in the Passing environment variables or files section.
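Since the names given in fileResources must match the files section of the workflow, it can help to check that the two lists agree before running the pipeline. The Groovy sketch below is hypothetical: the missingFileResources helper and the representation of ref elements as plain maps are assumptions for illustration (inside Jenkins, ref(...) is used in the pipeline, not a Groovy map).

```groovy
// Hypothetical helper, not part of the plugin.
// declaredFiles: names listed in the files section of the workflow
// resources:     name/path pairs mirroring the attributes of the ref elements
List<String> missingFileResources(List<String> declaredFiles, List<Map<String, String>> resources) {
    Set<String> provided = resources.collect { it.name } as Set
    declaredFiles.findAll { !provided.contains(it) } // declared but not provided
}

def declared = ['myfile', 'otherfile']
def resources = [[name: 'myfile', path: 'TEST-ForecastSuite.xml']]
println "Missing file resources: ${missingFileResources(declared, resources)}"
```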

Backward compatibility with the squash-devops.hpi plugin

As part of its transition to open source, this plugin was renamed in version 1.3.1, going from squash-devops-1.3.0.hpi to opentestfactory-orchestrator-1.3.1.hpi.

To ease the transition for users, version 1.3.1 ensures the following backward compatibility:

- If you uninstall the squash-devops.hpi plugin and install the opentestfactory-orchestrator.hpi plugin, the servers configured with the legacy plugin are restored.
- Pipelines using the runSquashWorkflow method are still supported. However, this method is deprecated and will no longer be supported in future versions; use the runOTFWorkflow method instead.
- Environment variables using the SQUASHTF_ prefix are still supported. However, they are deprecated and will no longer be supported in future versions; use the OPENTF_ prefix instead.

This backward compatibility is still ensured for the current version: opentestfactory-orchestrator-2.1.0.hpi.
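When migrating a pipeline, the deprecated SQUASHTF_ variables only need to be renamed with the OPENTF_ prefix. The Groovy sketch below shows this renaming on a plain map; migrateEnvironment is a hypothetical helper, and in practice the change is made directly in the environment section of the pipeline.

```groovy
// Hypothetical helper: renames deprecated SQUASHTF_ variables to OPENTF_.
// If both forms exist for the same name, the OPENTF_ value is kept.
Map<String, String> migrateEnvironment(Map<String, String> env) {
    Map<String, String> result = [:]
    env.each { name, value ->
        if (name.startsWith('SQUASHTF_')) {
            String newName = 'OPENTF_' + name.substring('SQUASHTF_'.length())
            if (!env.containsKey(newName)) {
                result[newName] = value
            }
        } else {
            result[name] = value // already OPENTF_ or unrelated: keep as-is
        }
    }
    result
}

println migrateEnvironment([SQUASHTF_FOO: '12', OPENTF_TARGET: 'https://example.com/target'])
```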